Evolution of Application Hosting

In the early days of computing, each application was typically hosted on its own dedicated server. Each server came with its own hardware, including a central processing unit (CPU), memory, storage, and network equipment, which drove up expenses for organizations. Managing the separate resources of every server was also a complex task, which made it difficult to use those resources efficiently and keep costs under control.

To solve this problem, VMware, a leading company in virtualization technology, played a significant role in introducing virtual machines (VMs).

What is a Virtual Machine?

A virtual machine is like a computer that doesn't physically exist but resides within another real computer. Imagine running different operating systems on a single computer. For example, you have a Windows machine, and you want to run Ubuntu, macOS, another Linux distribution, or another version of Windows on it without buying extra hardware such as a CPU and networking devices for each of those machines. This is where virtual machines (VMs) come in. VMs are created and managed by virtualization software such as VMware or Oracle VirtualBox, which allows multiple VMs to run on a single physical machine. Each VM is allocated its own share of hardware resources from the host machine. This technology improves resource utilization, flexibility, and scalability in computing environments.

Containers

When dealing with virtual machines (VMs), one major challenge arises when sharing applications. For instance, if you create an application and share it with a friend for testing, they may run into problems because of missing packages, different module versions, or other dependency-related issues. VMs have limitations when it comes to portability and ensuring consistent environments between different systems. To eliminate the hassle of varying dependencies and configurations, we use containers. When you share a container, you're essentially bundling all the necessary components (database, frontend, backend, dependencies, source code) into a single package. This container can then run on your friend's computer, so the application behaves consistently across different environments. In a nutshell, containers offer a simple and consistent way to bundle and share applications, making collaboration and deployment smoother by avoiding the challenges of VMs.

Difference between VMs and Containers

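VMs use hardware virtualization: a hypervisor gives each VM its own virtual hardware, and each VM runs a full guest operating system. Containers use OS-level virtualization: they share the host's kernel and isolate only the application and its dependencies. In simple terms, virtualization creates virtual versions of things like operating systems, servers, or storage, and containerization is a lighter form of virtualization, which is why containers usually start faster and use fewer resources than VMs.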
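One way to see this difference in practice is to compare kernel versions. The sketch below assumes Docker is installed and that the ubuntu image can be pulled; compare_kernels is only an illustrative helper name, not part of Docker.

```shell
# Compare the kernel version seen by the host with the one seen inside a container.
# Assumes Docker is installed; compare_kernels is a hypothetical helper name.
compare_kernels() {
    host_kernel=$(uname -r)
    # --rm removes the container once the command finishes
    container_kernel=$(docker run --rm ubuntu uname -r)
    echo "host:      $host_kernel"
    echo "container: $container_kernel"
}
```

Calling compare_kernels on a Linux host should normally print the same version twice, because the container has no kernel of its own. On Docker Desktop for macOS or Windows, containers run inside a small Linux VM, so the container reports that VM's kernel instead.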

What is Docker?

Docker is a containerization platform that enables the rapid development, testing, and deployment of applications. Developers specify the application and its dependencies in a Dockerfile, from which Docker builds images, and containers are then created from those images. This keeps the application consistent and reliable across various environments. To install Docker, refer to docs.docker.com

Runtime

A container runtime is the component responsible for executing and managing containers. runc is the low-level container runtime: it launches the actual container process and manages its lifecycle. It's like the engine inside a car. A higher-level manager called containerd handles the broader aspects of containers, including managing images and starting and stopping containers, and it relies on runc to run them. It is like a driver who takes care of every small detail of operating the car, allowing you to focus on reaching your destination. Together, they offer a dependable and effective way to manage and run containers.
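To check which low-level runtime a Docker installation uses, you can ask the daemon directly. This is a minimal sketch that assumes Docker is installed and the daemon is running; show_default_runtime is a hypothetical helper name.

```shell
# Ask the Docker daemon which low-level runtime it uses by default.
# Assumes Docker is installed and the daemon is running.
show_default_runtime() {
    docker info --format '{{.DefaultRuntime}}'
}
```

On a standard Docker Engine installation this typically prints runc, although other runtimes can be configured.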

Docker Engine

Docker uses a client-server architecture. Docker Engine is responsible for building, running, and managing Docker containers. The engine includes a server daemon called the Docker daemon, a REST API that specifies how programs interact with the daemon, and a command-line interface (CLI), also known as the Docker client, that communicates with the daemon.

The Docker daemon listens for Docker API requests made through the Docker client and manages operations such as building images and running and stopping containers.
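The split between client and daemon is visible from the command line. The sketch below assumes Docker is installed and the daemon is running; the second function additionally assumes a Linux host with the default socket path /var/run/docker.sock and curl installed. Both function names are illustrative.

```shell
# Show the client (CLI) and server (daemon) versions separately.
show_client_and_server() {
    echo "client: $(docker version --format '{{.Client.Version}}')"
    echo "server: $(docker version --format '{{.Server.Version}}')"
}

# Talk to the daemon's REST API directly, without going through the CLI.
# Assumes the default Unix socket path on Linux.
query_api_version() {
    curl --silent --unix-socket /var/run/docker.sock http://localhost/version
}
```

show_client_and_server prints one line for each side of the architecture, and query_api_version returns the daemon's version information as JSON, which is the same kind of request the Docker client sends on your behalf.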

Orchestration

Orchestration refers to the automated coordination and management of the tasks involved in deploying, scaling, and maintaining containerized applications. Orchestration tools such as Kubernetes take charge of processes like container deployment, scaling based on demand, network setup, load balancing, and health monitoring.

For example, imagine a toy store where kids want to play. Instead of one big room, we have small play areas (containers) for each group of toys. Just like at a big party, we create more play areas for more kids and fewer for a smaller gathering. This is similar to how computers use containers to run apps, keeping them organized. Orchestrating the play is like having helpers who set up play areas, decide how many toys go in each, and plan based on the number of kids. It is smart planning to ensure everything runs smoothly. In the computer world, orchestrating containers is like using programs to plan where apps should run, how many copies to run, and how to adjust as needed for a well-organized setup.
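The "scaling based on demand" idea can be sketched with plain Docker commands. This is only a toy illustration of what an orchestrator automates, not how Kubernetes works internally; the reconcile function, the app=demo label, and the nginx image are all assumptions made for the example.

```shell
# Toy scaling step: make the number of running containers match a desired count.
# Assumes Docker is installed; the label app=demo and the nginx image are illustrative.
reconcile() {
    desired=$1
    # Count the running containers that carry our label
    running=$(docker ps --quiet --filter "label=app=demo" | wc -l | tr -d ' ')
    # Too few: start more containers
    while [ "$running" -lt "$desired" ]; do
        docker run --detach --label app=demo nginx >/dev/null
        running=$((running + 1))
    done
    # Too many: remove the extras one at a time
    while [ "$running" -gt "$desired" ]; do
        victim=$(docker ps --quiet --filter "label=app=demo" | head -n 1)
        docker rm --force "$victim" >/dev/null
        running=$((running - 1))
    done
    echo "running=$running desired=$desired"
}
```

Calling reconcile 3 starts containers until three are running, and a later reconcile 1 removes two of them. A real orchestrator runs this kind of comparison continuously and also handles networking, health checks, and placement across machines.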

Docker/Container image and Dockerfile

A Docker image is a complete package for a computer program, containing everything it needs to run. It's created from instructions in a Dockerfile and serves as a portable template. These images can be shared and used to create containers, which are live instances of the program, making it easy to run applications consistently across various environments.

So, a Docker image is like a special recipe card for computer programs. It tells the computer exactly what it needs to run a specific program, just like your recipe card tells you how to make your favorite dish. And when you want to use that program, the computer follows the instructions from the recipe card and creates a little cooking space called a "container" where the program can run happily. Docker makes sure everything is organized and runs smoothly, just like following your recipe to make a delicious meal!

Images are built from layers. Each layer is an immutable set of files and directories that records the filesystem changes made by a build instruction.
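You can look at the layers of any local image. The sketch below assumes Docker is installed and the image has already been downloaded (for example with docker pull ubuntu); show_layers is a hypothetical helper name.

```shell
# Inspect the layers that make up an image.
# Assumes Docker is installed and the image is available locally.
show_layers() {
    image=$1
    # One row per build step, most recent first, with the size each step added
    docker history "$image"
    # The content-addressed digests of the filesystem layers, as JSON
    docker image inspect --format '{{json .RootFS.Layers}}' "$image"
}
```

For example, show_layers ubuntu lists the build steps of the ubuntu image followed by its layer digests. Steps that only change metadata, such as CMD, show up in the history with a size of 0B.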

Docker Registry

A Docker registry is a system for storing and distributing Docker images. Docker Hub is a public registry, and private registries offer organizations control over image access and security.

Some basic commands

To run an image, use

docker run image_name

or, to keep an interactive terminal attached so that the container doesn't exit immediately,

docker run -it image_name

To download an image, use

docker pull image_name

Other useful commands:

- docker images: shows all the downloaded images.
- docker container ls: shows the running containers; docker ps is the shorter form of the same command. Add -a to either one to include stopped containers.
- docker container exec -it unique_container_id bash: opens an interactive bash shell inside a running container.
- docker stop container_name/id: stops a container.
- docker rm container_name/id: removes a container.
- docker inspect container_id: shows all the information about a container.
- docker logs container_name/id: shows the logs generated by a container.
- docker container prune -f: removes all stopped containers.
- docker rmi -f image_name: removes the image.
- docker commit -m "message" container_name/id new_image_name: creates a new image from changes made to a container.
- docker images -q: shows only the unique IDs of all the images.
- docker rmi $(docker images -q): removes all the images.
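Put together, these commands cover the whole life of a container. The following sketch assumes Docker is installed; the nginx image and the container name demo-web are illustrative choices, and container_lifecycle is a hypothetical helper name.

```shell
# Walk one container through its lifecycle.
# Assumes Docker is installed; nginx and demo-web are illustrative choices.
container_lifecycle() {
    docker pull nginx                          # download the image
    docker run --detach --name demo-web nginx  # start a container in the background
    docker ps                                  # demo-web should be listed as running
    docker logs demo-web                       # view its output so far
    docker exec demo-web ls /                  # run a command inside the container
    docker stop demo-web                       # stop it
    docker rm demo-web                         # remove the stopped container
}
```

Giving the container a name with --name means the later commands can refer to it without looking up its ID first.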

Create a Docker image

- Create a folder and, inside it, create a file named "Dockerfile", since docker build looks for this name by default.

FROM ubuntu

RUN apt-get update

CMD ["echo", "Hello World"]

Here FROM sets the base image, RUN executes a command while the image is being built, and CMD is the command that runs when a container starts.

We can create the image by

docker build -t image_name path_of_the_dockerfileUse

docker run image_nameafter which CMD section of Dockerfile will get executed when you create a container out of the image.To push it in Docker Hub so that anyone can access it login into docker using

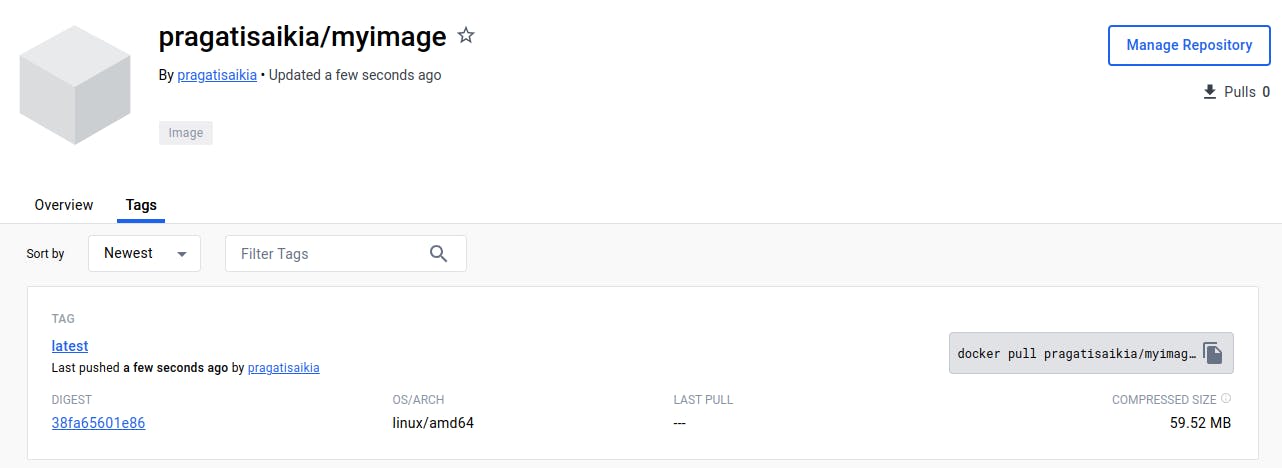

docker loginin the CLI.Before pushing the image, you need to tag it with your Docker Hub username and the desired repository name . Here is an example :

docker tag myimage pragatisaikia/image-demo:latestmyimage is the local image name. pragatisaikia is my dockerhub username and image-demo is the image name I want to show publicly in dockerhub and latest is the tag.

Push the image using

docker push pragatisaikia/image-demo:latest

Now you can see your image on Docker Hub.
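The whole build, tag, and push sequence can be collected into a small script. This is a sketch under a few assumptions: Docker is installed, docker login has already succeeded, and write_dockerfile and build_and_push are hypothetical helper names introduced only for this example.

```shell
# Write the example Dockerfile into a folder.
write_dockerfile() {
    dir=$1
    mkdir -p "$dir"
    cat > "$dir/Dockerfile" <<'EOF'
FROM ubuntu
RUN apt-get update
CMD ["echo", "Hello World"]
EOF
}

# Build the image, tag it for Docker Hub, and push it.
# Assumes docker login has already been run.
build_and_push() {
    dir=$1          # folder containing the Dockerfile
    local_name=$2   # local image name, e.g. myimage
    remote=$3       # username/repository:tag on Docker Hub
    docker build -t "$local_name" "$dir" &&
    docker tag "$local_name" "$remote" &&
    docker push "$remote"
}
```

For example, write_dockerfile ./demo followed by build_and_push ./demo myimage pragatisaikia/image-demo:latest repeats the steps above. The && between the commands stops the sequence as soon as one step fails, so a broken build is never pushed.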

That's it for this article! Let's learn and make the most out of it!